HANA News Blog

Beware of cloud costs

Unforeseen cloud cost increases

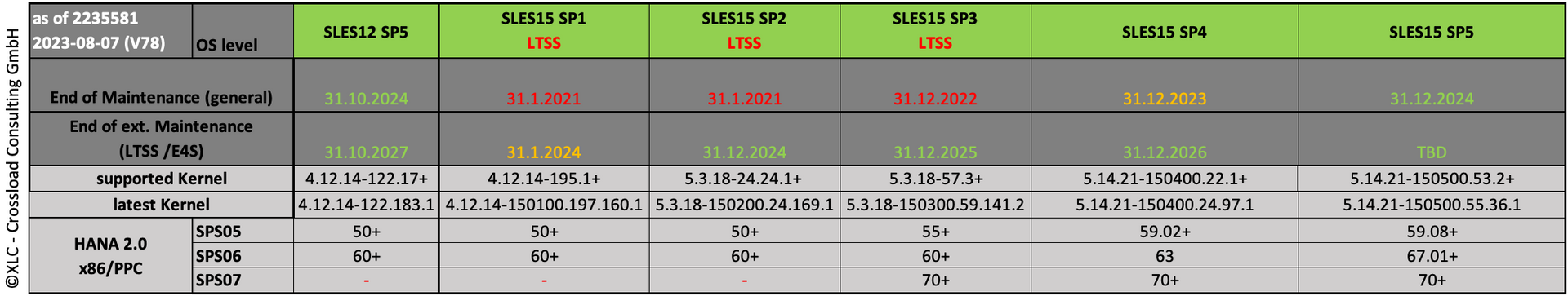

The TCO calculation for cloud costs is a moving target. In the past, all hyperscalers have increased their prices or, like other cloud service providers such as SAP, have built an annual increase directly into the contract. The focus is usually on the services consumed, but associated costs that are still small today and have never been on the radar can explode when a provider changes its licensing or subscription model. The most recent case is Broadcom/VMware, and now another vendor has announced a change: Red Hat.

Most SAP systems still run on SUSE, and as far as I know so do all RISE (and GROW) with SAP systems (public and private). In recent years, more and more customers changed their OS strategy because of lower or equal costs and RHEL features, and migrated their systems to RHEL.

Red Hat announced in January 2024 that pricing for cloud partners would change effective April 1, 2024. They call it scalable pricing. The previous pricing structure was a two-tiered system that categorized VMs as either "small" or "large" based on the number of cores or vCPUs, with a fixed price assigned to each category regardless of the VM's actual size. Subscription fees were determined by the duration of VM allocation. Within a tier, the number of cores or vCPUs did not influence the price, so the cost of a RHEL subscription was capped.

Old RHEL Subscription model categories:

- Red Hat Enterprise Linux Server Small Virtual Node (1-4 vCPUs or Cores)

- Red Hat Enterprise Linux Server Large Virtual Node (5 or more vCPUs or Cores)

With scalable pricing, the new price depends on the number of vCPUs. Unlike before, the cost of a subscription is no longer capped. Some of you will remember the chaos of socket-based subscriptions or the Oracle licensing model, including the parking spot analogy. It also means that a virtual core costs as much as a physical core. OK, fair enough: if I use more cores, I have to pay more. But this means the costs can also be lower for systems with fewer vCPUs, right? So this fancy new "scalable pricing" can be a good thing. When do the costs drop, when do they rise, and who is affected?

Red Hat:

"In general, we anticipate that the new RHEL pricing to cloud partners will be lower than the current pricing for small VM/instance sizes; at parity for some small and medium VM/instance sizes; and potentially higher than the current pricing for large and very large VM/instance sizes."

New RHEL Subscription model categories:

- Red Hat Enterprise Linux Server Small Virtual Node (1-8 vCPUs or Cores)

- Red Hat Enterprise Linux Server Medium Virtual Node (9 - 128 vCPUs or Cores)

- Red Hat Enterprise Linux Server Large Virtual Node (129 or more vCPUs or Cores)
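To make the shift between the two category lists concrete, here is a minimal Python sketch that maps a vCPU count to its category under the old and the new model. The function names `old_tier` and `new_tier` are hypothetical and only illustrate the boundaries listed above. They are not part of any Red Hat or hyperscaler tooling.

```python
def old_tier(vcpus: int) -> str:
    """Category under the old two-tier model (1-4 small, 5 or more large)."""
    if vcpus < 1:
        raise ValueError("vcpus must be at least 1")
    return "small" if vcpus <= 4 else "large"


def new_tier(vcpus: int) -> str:
    """Category under the new model (1-8 small, 9-128 medium, 129 or more large)."""
    if vcpus < 1:
        raise ValueError("vcpus must be at least 1")
    if vcpus <= 8:
        return "small"
    if vcpus <= 128:
        return "medium"
    return "large"


# Print both categories for a few example sizes.
for size in (4, 8, 20, 128, 129):
    print(size, old_tier(size), new_tier(size))
```

Keep in mind that the category alone no longer tells you the price under the new model, because the subscription cost now scales with the vCPU count.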

"Any new product (such as a new instance type, new instance size, new region, etc.) launched after July 1, 2024, will use the new RHEL pricing. Therefore, any new rates (such as new regions or new instance types) launched after July 1, 2024, will be on the new RHEL pricing, even if the Savings Plans were purchased prior to July 1, 2024."

"In response to Red Hat’s price changes, Azure will also be rolling out price changes for all Red Hat Enterprise Linux instances. These changes will start occurring on April 1, 2024, with price decreases for Red Hat Enterprise Linux and RHEL for SAP Business Applications licenses for vCPU sizes less than 12. All other price updates will be effective July 1, 2024."

"On January 26, 2024, Red Hat announced a price model update on RHEL and RHEL for SAP for all Cloud providers that scales image subscription costs according to vCPU count. As a result, starting July 1, 2024, any active commitments for RHEL and RHEL for SAP licenses will be canceled and will not be charged for the remainder of the commitment's term duration."

This means the first customers noticed the change when they received the July invoice in August. The smart cloud architects who already use cloud FinOps noticed it earlier ;)

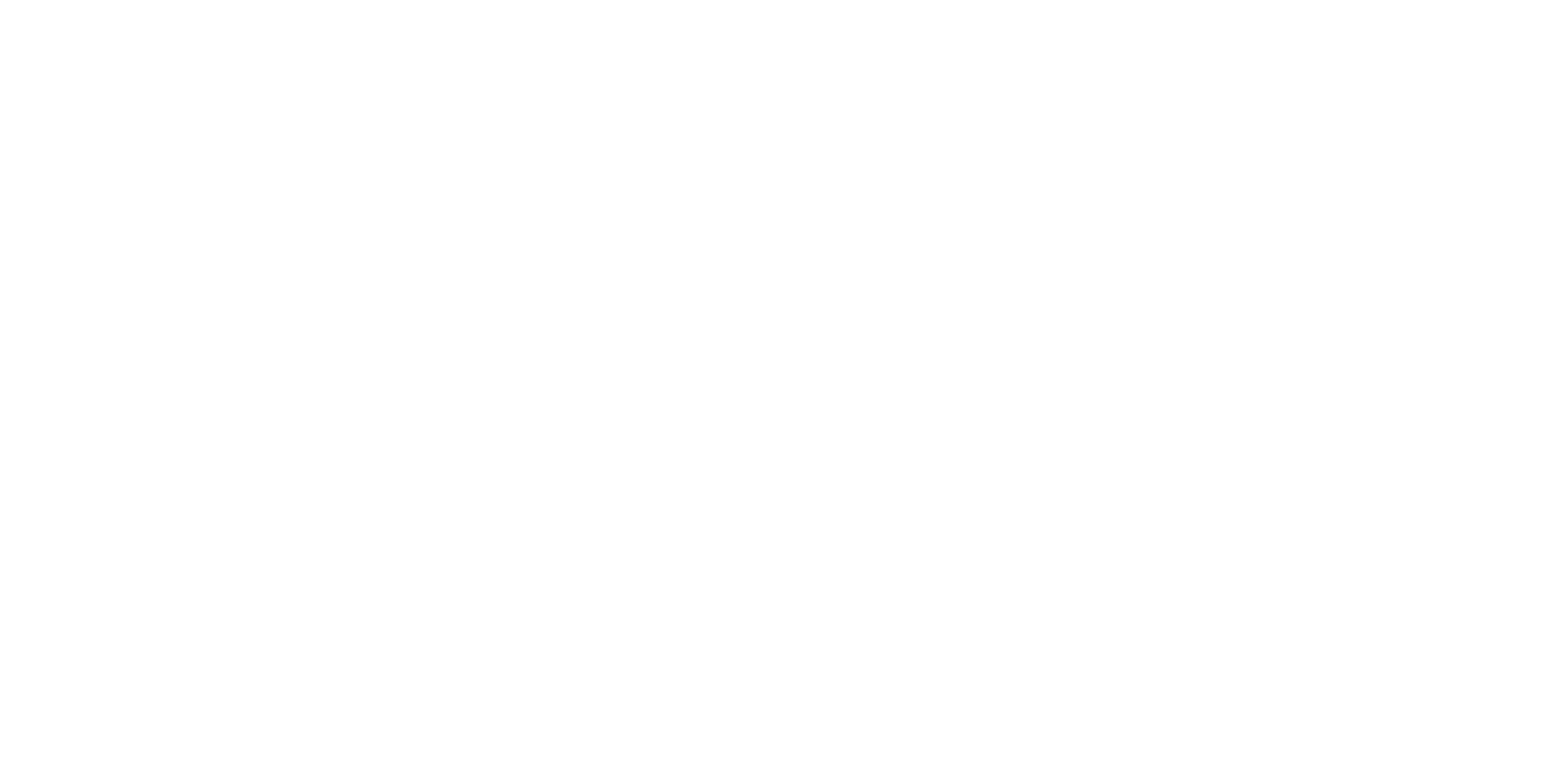

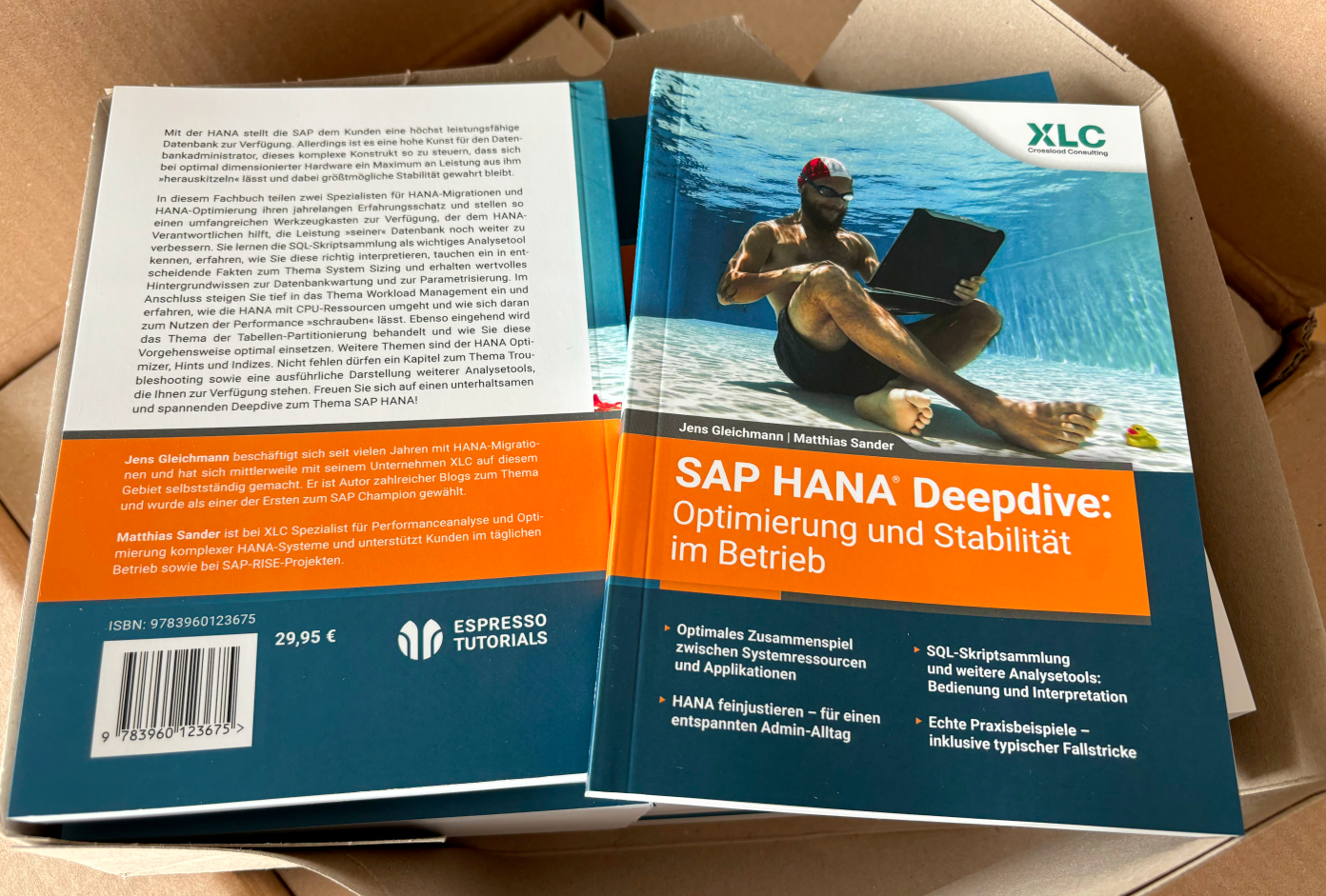

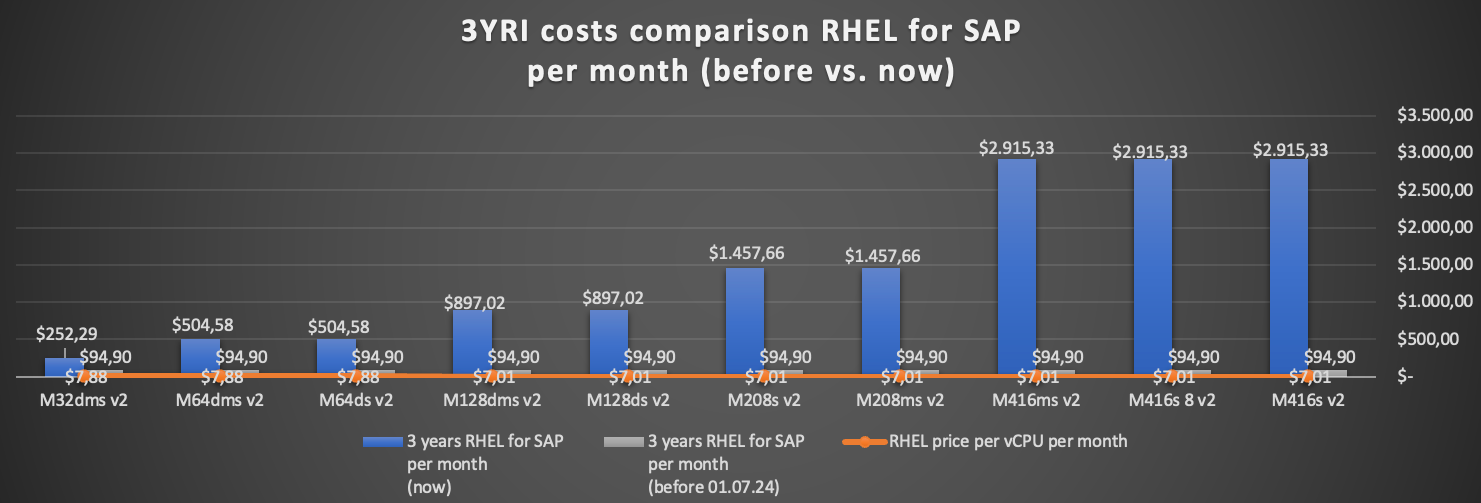

Let's have a look at the MS Azure pricing calculator, because Azure still has the largest market share. With 12 or fewer vCPUs you will save money. But what does that mean for customers running SAP HANA, given that the smallest certified HANA instance on MS Azure has 20 vCPUs? Previously, RHEL for SAP cost $94.90 per month for all certified HANA instances (the constant line at the bottom of the graph).

MS Azure: RHEL price per certified HANA SKU [source: MS price calc: europe west / germany west central]

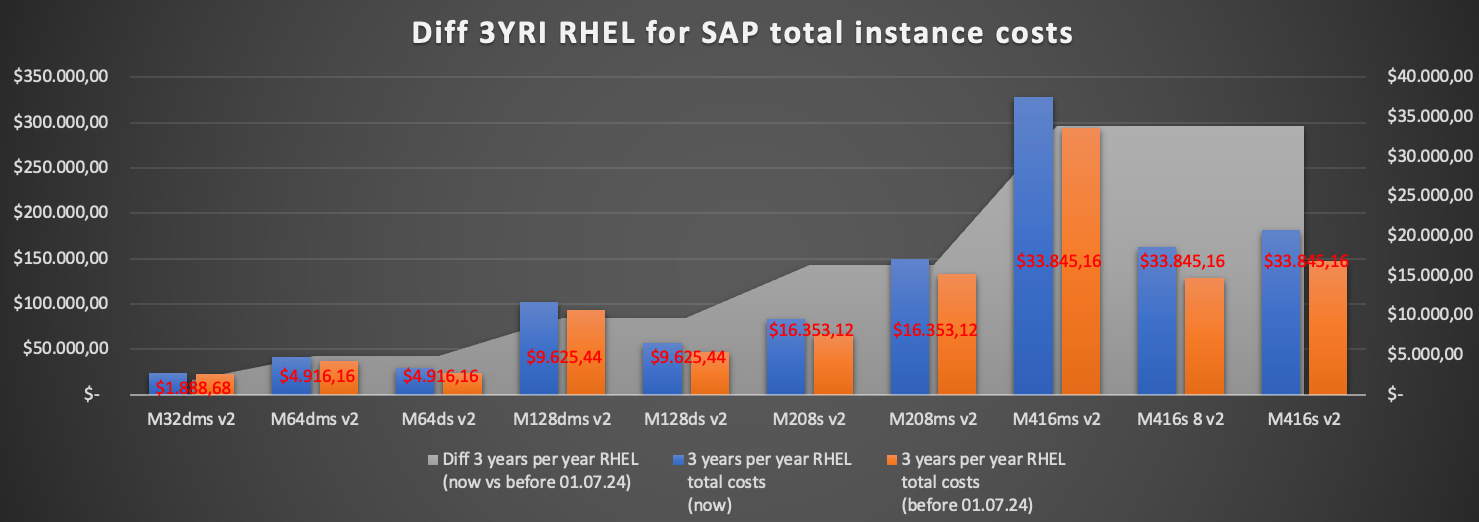

If you are running large instances with 128 or more vCPUs, you will see that the costs differ by more than $10,000 per month! If you are using large sandboxes or features like HANA system replication (HSR), you can multiply these additional costs.

MS Azure: Difference of 3 years reserved instances (3YRI) RHEL for SAP total instance costs [source: MS price calc: europe west / germany west central]

If we drill down to the RHEL-only costs per month, we can also see the price per vCPU and the price switch between the 64 vCPU and 128 vCPU SKUs.

MS Azure: Difference of 3 years reserved instances (3YRI) RHEL for SAP costs per month (before vs. now) [source: MS price calc: europe west / germany west central]

MS Azure: total RHEL pricing per SKU with costs per vCPU [source: MS price calc: europe west / germany west central]

Summary

For those running RHEL on small instances with < 12 vCPUs, the new RHEL pricing model is a saving. For SAP customers with larger instances it is a nightmare of unplanned additional costs, which took effect directly on July 1, 2024.

Paying the same price for a virtual core as for a physical core is a simple calculation, but the two do not deliver the same value. Anyone using multithreading is penalized more heavily, and once again customers will question whether Intel is worth the effort when comparing the cost with the performance gained.

Will customers now stop migrating to RHEL in cloud projects because of this heavy price difference?

Is it possible to get some RHEL volume discount?

The previous TCO calculation can be thrown in the trash.

Strange findings:

- the price calculators of Google and MS Azure are not up to date with the new RHEL pricing model

- if you choose RHEL for SAP with the HA option, the MS Azure price calculator still shows the old price of $87.60 for <= 8 vCPUs and $204.40 for larger instances

- MS Azure applies the large virtual node price ($7.01 per vCPU) to the 128 vCPU SKUs, which by definition should get the medium virtual node price ($7.88 per vCPU)
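The last finding is easy to check with a bit of arithmetic. The following Python sketch is a rough estimate, not an official price calculation. It assumes that the per-vCPU figures quoted above are monthly USD rates, like the old flat price of $94.90, and that every vCPU of an instance is billed at the same rate. The names `monthly_cost` and `break_even_vcpus` are made up for this example.

```python
from decimal import Decimal

# Figures quoted in this post (MS Azure price calculator).
# Assumption of this sketch: all of them are monthly amounts in USD.
OLD_FLAT_PRICE = Decimal("94.90")  # old RHEL for SAP price per certified HANA instance
MEDIUM_RATE = Decimal("7.88")      # medium virtual node, per vCPU
LARGE_RATE = Decimal("7.01")       # large virtual node, per vCPU


def monthly_cost(vcpus: int, rate_per_vcpu: Decimal) -> Decimal:
    """Monthly RHEL cost if every vCPU is billed at the same rate."""
    return rate_per_vcpu * vcpus


def break_even_vcpus(flat_price: Decimal, rate_per_vcpu: Decimal) -> int:
    """Largest vCPU count whose per-vCPU cost does not exceed the old flat price."""
    return int(flat_price // rate_per_vcpu)


by_definition = monthly_cost(128, MEDIUM_RATE)  # 128 vCPUs priced as medium node
as_calculated = monthly_cost(128, LARGE_RATE)   # 128 vCPUs priced as large node
print(by_definition, as_calculated, by_definition - as_calculated)
print(break_even_vcpus(OLD_FLAT_PRICE, MEDIUM_RATE))
```

Under these assumptions a 128 vCPU SKU comes to $1,008.64 per month at the medium rate and $897.28 at the large rate, a gap of $111.36 per instance. The break-even against the old flat price lands at 12 vCPUs for the medium rate, which fits the observation that only instances with 12 or fewer vCPUs get cheaper.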

Options:

- AWS, and maybe some others, can provide RHEL volume discounts in exchange for an annual spend commitment. Please work with your Technical Account Manager to assess whether you qualify.

- resize instances that currently do not need their full number of vCPUs

- migrate to SUSE (but SUSE will surely adapt its prices in the near future as well - just a matter of time)

SAP HANA News by XLC